New AI Guidance and Impact Factor Updates

. . . and a new winner in the "Best Car Name" category.

Greetings from our temporary journal headquarters in small-town Italy. There’s a lot of old stuff here, all of it beautiful in its own unique way (yes ha ha I’m old too). Yet the thing that’s struck me most is new: electric bikes are rapidly replacing the screaming, oil-burning 49cc two-stroke motor scooters that were so popular with the young crowd before. Piaggio Ape trikes, which likely put out more pollutants than 500 new compact cars, will hopefully be next to go, but that’s going to take a while. In the meantime, I also discovered that Peugeot produced a car called the “Bipper Tepee”, which kept my eleven-year-old and me entertained for about 30 minutes through the Appennino Lucano, and only partly because it passed me on the downhill side. I could only wish, however, that it had been such a glorious green.

There are a lot of great car names in the world, but this one is now tops on my list. It made me think of the great work of Steve Klepper and all the variety there was in the nascent auto industry. See how I tried to tie that back to the journal? Not successfully?

Okay, back to things you care about. We have a couple of quick announcements first, then I’ll introduce our new guidance on artificial intelligence usage that will be posted online shortly. Finally, I’ll discuss the newly released 2024 Journal Impact Factors from Clarivate. Those of you who think I’m too metric-focused can tune out then, if I didn’t already lose you with the Bipper Tepee.

Dissertation Competition and Special Issue Deadline

The INFORMS Organization Science Dissertation Proposal Competition deadline is tomorrow (June 20), so if you were considering submitting, now would be the time to do so. It’s not technically a journal-based competition, but we coordinate on this great event. You can find the information on the link above, and the competition chair Michael Park (INSEAD) is already busy processing submissions.

The deadline for the special issue on Remote and Hybrid Work is July 15, so if you’re preparing to submit something, make sure you hit that deadline. We’ve got a great set of editors and are anticipating a strong submission pool. Like all our special issues now, this one is not a tournament for a limited number of slots and our editor team is processing papers at our usual efficient speed. I hope you’ll consider submitting any relevant work.

Artificial Intelligence at Organization Science

We’ve received quite a bit of feedback recently that we should provide better guidance on acceptable uses of artificial intelligence, and this is completely fair. We should have done so earlier. So here it is, version 1.0, in all its imperfect form:

Creating a specific AI “policy” is tricky for several reasons:

1. The technology is changing so rapidly that anything specific might be outdated in a few weeks.

2. We still don’t have great data on how people are using AI in their research or the review process, and any such data might be obsolete in a couple of weeks (see Point 1).

3. It’s incredibly hard to cleanly define the boundaries between acceptable (e.g., proofreading/copyediting) and unacceptable (e.g., generative writing) categories.

4. As with any new technology, there are equity concerns in defining its use.

Let’s examine Point 4. Copyediting in academic research has always carried equity concerns because copyeditors were expensive and thus unavailable to many. Similarly, native English speakers such as myself have an inherent advantage in writing in English over those for whom it is a second, third, or fourth language. This doesn’t always produce better results, but it might reduce time and effort costs, ceteris paribus. AI can dramatically reduce writing costs for non-native speakers of any language, so banning its use perpetuates language-based inequity. Yet widespread use for translation is problematic as well because we care about precision and nuance. This is particularly tough in languages like English where there might be five words meaning slightly different things, with roots in Latin, Greek, Old English, Norse, and other languages.

For all these reasons and more, we present this document as strong guidance, recognizing that it is incomplete, imprecise, and a snapshot in time. Where that guidance is closer to rules is for reviewers and editors, largely because of intellectual property concerns. As a reviewer, don’t ask AI to write your report, even if you give it all the main points. Just don’t do it. Perhaps in a year we’ll say something different, but for now it’s disallowed. Copyedit or proofread or adjust for tone, but never feed any paper material into an AI engine, even if you feel confident that your chatbot is sequestered from the public. Not only does this risk IP violations, but it also favors papers that conform to prior forms and standards.

We’ll continue to revise and adjust as we gather more data on usage. We know our guidance is not perfect, but we think it’s reasonable given what we currently have and know now.

2024 Impact Factor

As you know, each year Clarivate computes a bunch of fancy metrics to try to measure journal “impact” or importance, whatever those mean. Their primary measure, the two-year impact factor (IF), measures the average number of citations this year (2024) to all papers published in the prior two years (2022 and 2023). As of a couple of years ago, they define “published” as “showed up online”, so journals with long backlogs might have papers referenced as 2024 that are indexed as 2023. It’s a bit complicated, and never very satisfying. Ours went up again this year to 5.4 (2024), from 4.1 (2022) and 4.9 (2023) in the two previous years. [1]
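For anyone who wants the arithmetic spelled out, here is a minimal sketch of the two-year calculation as described above. The function name and the counts are hypothetical, chosen only so the result lands on 5.4; they are not our actual citation or paper counts, and Clarivate's real procedure has more wrinkles (what counts as a citable item, for one).

```python
def two_year_impact_factor(citations_this_year: int, items_prior_two_years: int) -> float:
    """Average citations received this year per paper published in the prior two years."""
    if items_prior_two_years <= 0:
        raise ValueError("need at least one paper in the two-year window")
    return citations_this_year / items_prior_two_years


# Hypothetical counts for illustration only (not real journal data).
citations_2024 = 1080    # 2024 citations to papers published in 2022 and 2023
items_2022_2023 = 200    # papers published in 2022 and 2023

impact_factor = two_year_impact_factor(citations_2024, items_2022_2023)
print(round(impact_factor, 1))
```

Because the paper count is the denominator, anything that inflates it without adding many citations pulls the number down.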

It seems expected by some that I as Editor-in-Chief first publicly state that I don’t care about IF, because doing so would taint the purity of the scientific mission, but the reality is that IF matters because it is a noisy but real key performance indicator (KPI) of what’s getting read and referenced. So I do care about it. This is different than being a KPI of quality, however people define that. I wouldn’t change our portfolio of papers for that of any higher-cited journal in our field.

Like nearly all KPIs, IF is biased in many ways and can be gamed by journals that seek to boost it. I do see IF changes for us as roughly indicative of whether our papers are getting read and incorporated into future work more or less than before, which is a function of research quality, visibility, and relevance. It also matters because parts of the market say it matters. Will authors be appropriately recognized by their employers for the great work they publish here? At many places, IF or the categorization of the journal (A, B) as a function of IF can swing a tenure case one way or the other. So as Editor-in-Chief, I see it as important to support our authors. You might disagree, which is fine. I don’t, however, care about it enough to game it, because it’s not hard to do so if you want to. It’s easy, for example, to build strongly predictive machine learning models of future citations that clearly identify Lamar Pierce papers as being bad for impact factor. But we simply stand by the research that our senior editors accept, and work to make sure that others read and understand it for the value it has.

After all that ranting, the bottom line is that Organization Science received its highest impact factor ever this year, 5.4, despite some mechanical factors in the calculation working against us. Nearly all the other metrics went up. [2] We’re really happy with this, since we think this trend reflects the growing visibility and performance of the journal, built on the high-quality content it has always published. IF is a lagging KPI. Consequently, most papers in the 2024 IF were accepted before I became EIC, so credit is due to the prior editorial team for the quality of research that we believe is being increasingly recognized. We’ve been lucky to have that great content to promote, and to have a new stream of more recently submitted papers to join it.

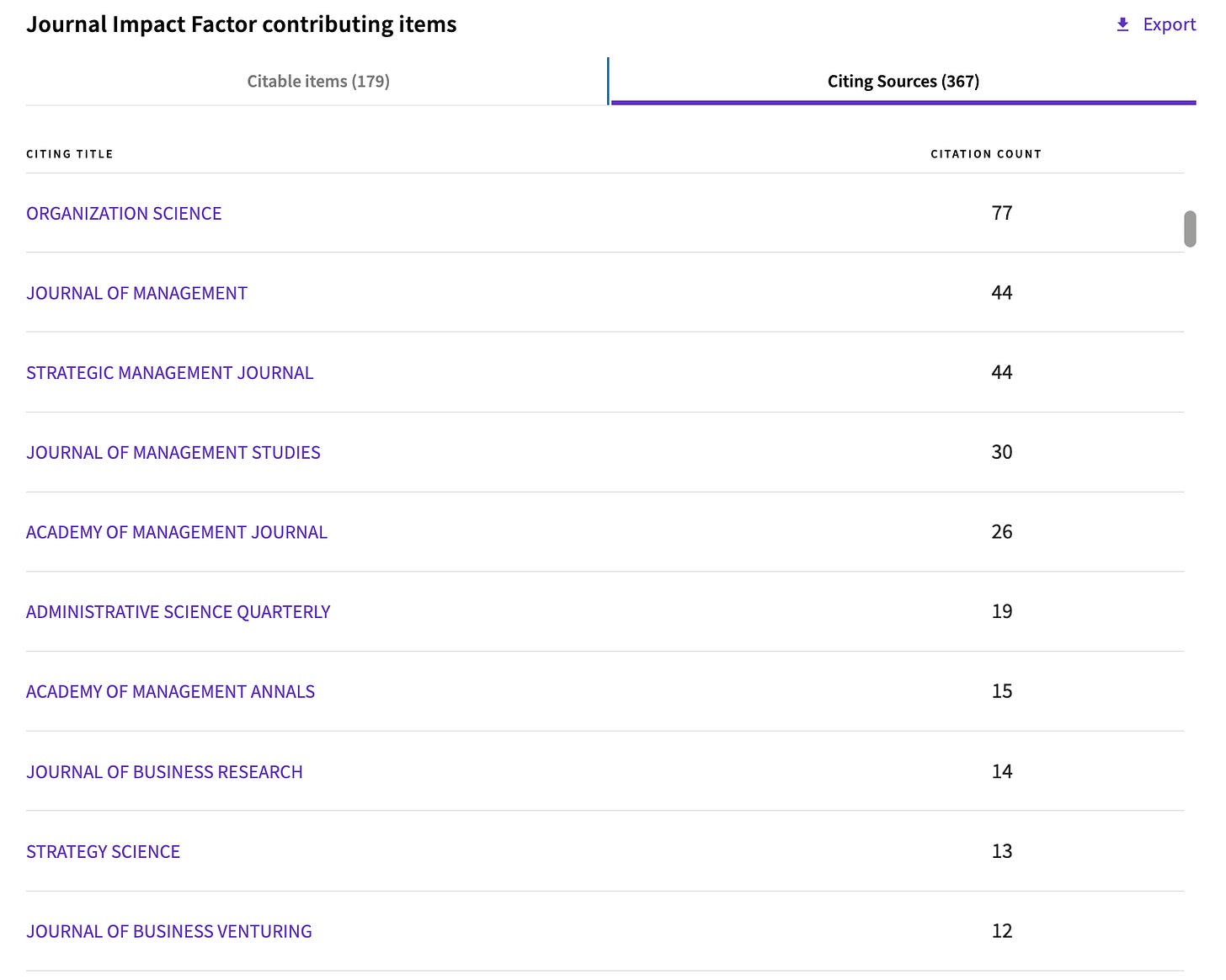

There’s a lot of interesting data in journal citation reports, so if your school has a Web of Science subscription you should be able to peruse them. Then maybe you can explain all the eigenfactor calculations to me. But for scholars considering submitting here at Organization Science, this is a snapshot of the journals that cited our papers the most in 2024. And yes, you can make some snarky remarks about us citing ourselves, but that’s the case with nearly every large journal (AMJ is a rare exception, where they are a close second, but they just get cited A LOT).

That’s all for today. We’ll be back early next week with more. I managed to get the one-eyed kitten outside to finally eat some tuna, so my day feels like a success.

-Lamar

[1] Our IF should really be 5.5, but Clarivate decided to count two of our least cited papers twice. Oh wait, I’m not supposed to be this obsessed with metrics. . .

[2] The only one that went down was “Recency”, which measures 2024 citations to 2024 publications, I guess as a leading indicator. The challenge here is of course that papers that come online in December are basically screwed. Plus there are issues of preprints, etc. As you can see, I’m very good at rationalizing away things that don’t look good. It’s a key skill for those in leadership positions.